Projects

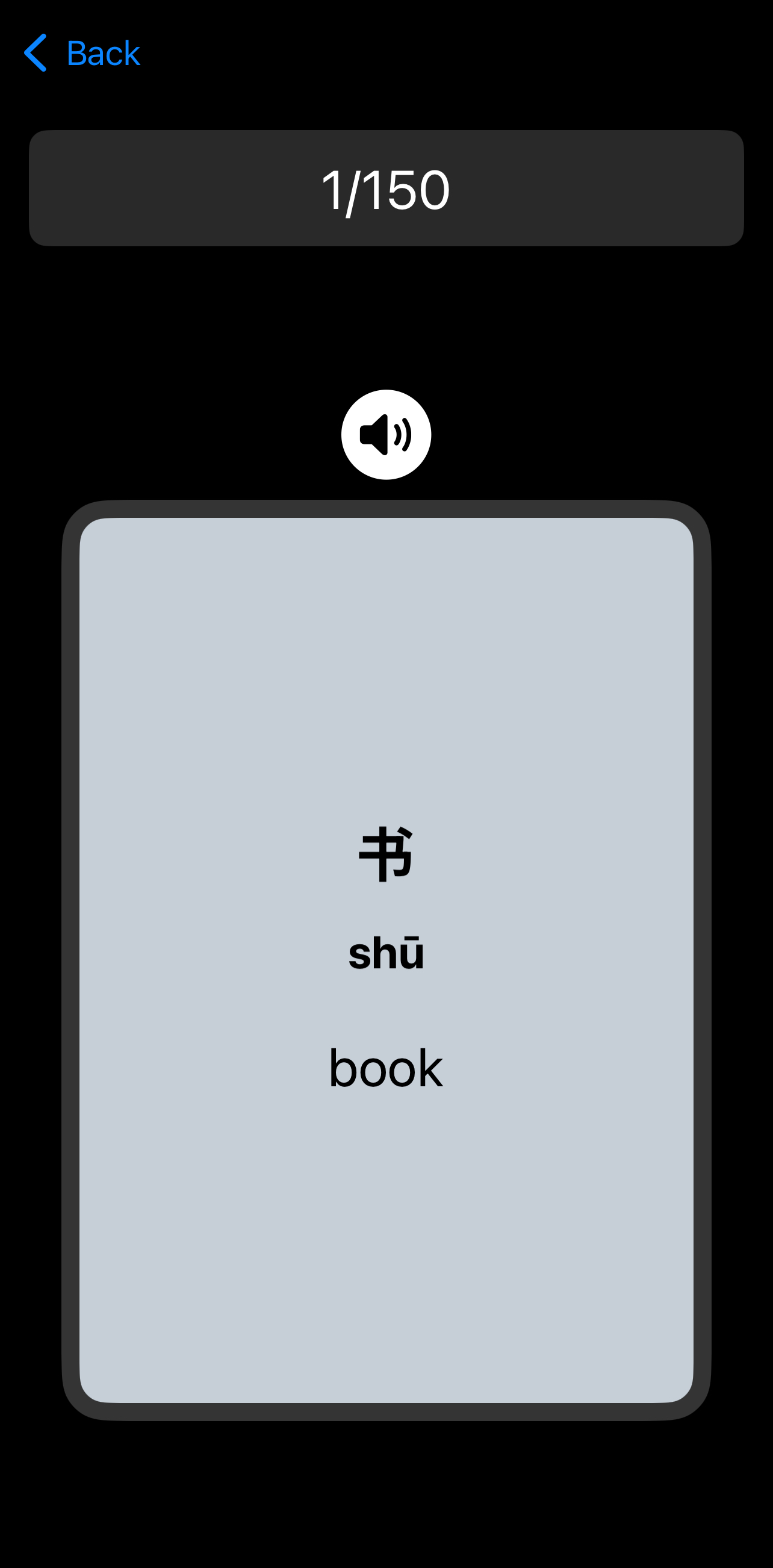

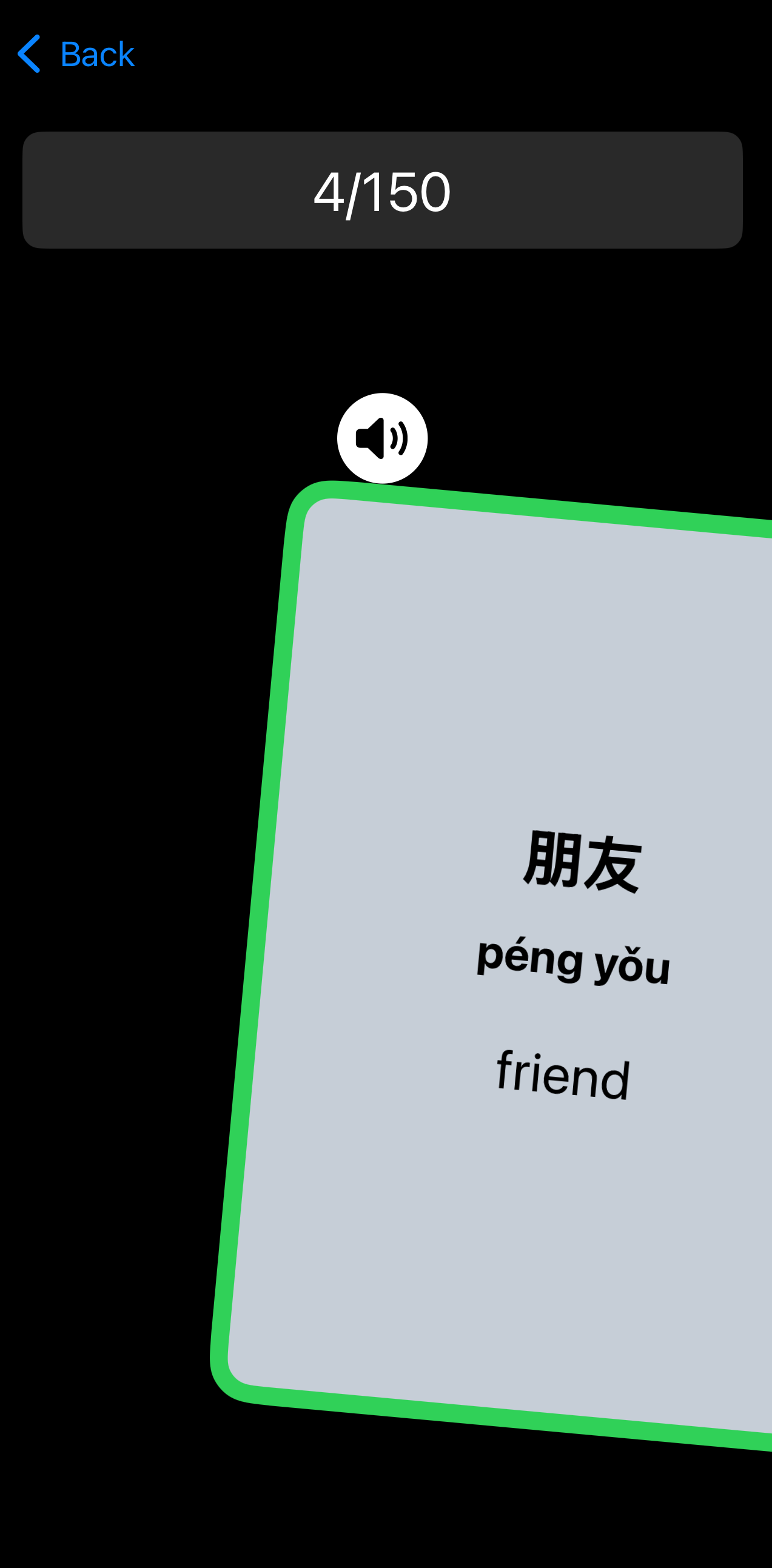

Interactive Chinese Flashcards with Audio

(In Progress)

Swift

I wanted to learn Chinese vocabulary, but I struggled to remember the words. When I searched for flashcard apps on the App Store, I found that most of them were either paid or lacked the option to add cards in bulk—I would have to enter each word one by one. So, I decided to take this opportunity to learn both Swift and Chinese at the same time by creating my own app!

I designed my app to be enjoyable and easy to use. When a user remembers a word, they swipe right and the card turns green; if they don’t remember it, they swipe left and the card turns red. I also added a speaker icon that users can tap to hear the pronunciation. Currently, it uses Apple’s native text-to-speech, which isn’t perfectly accurate, so in the future I plan to upgrade it using Azure’s API for both text-to-speech and pronunciation scoring, so users can hear more accurate pronunciations and get feedback on their own speaking. This will make learning even more effective!

Coin-Collecting Robot with Reinforcement Learning

(In Progress)

C# + Unity

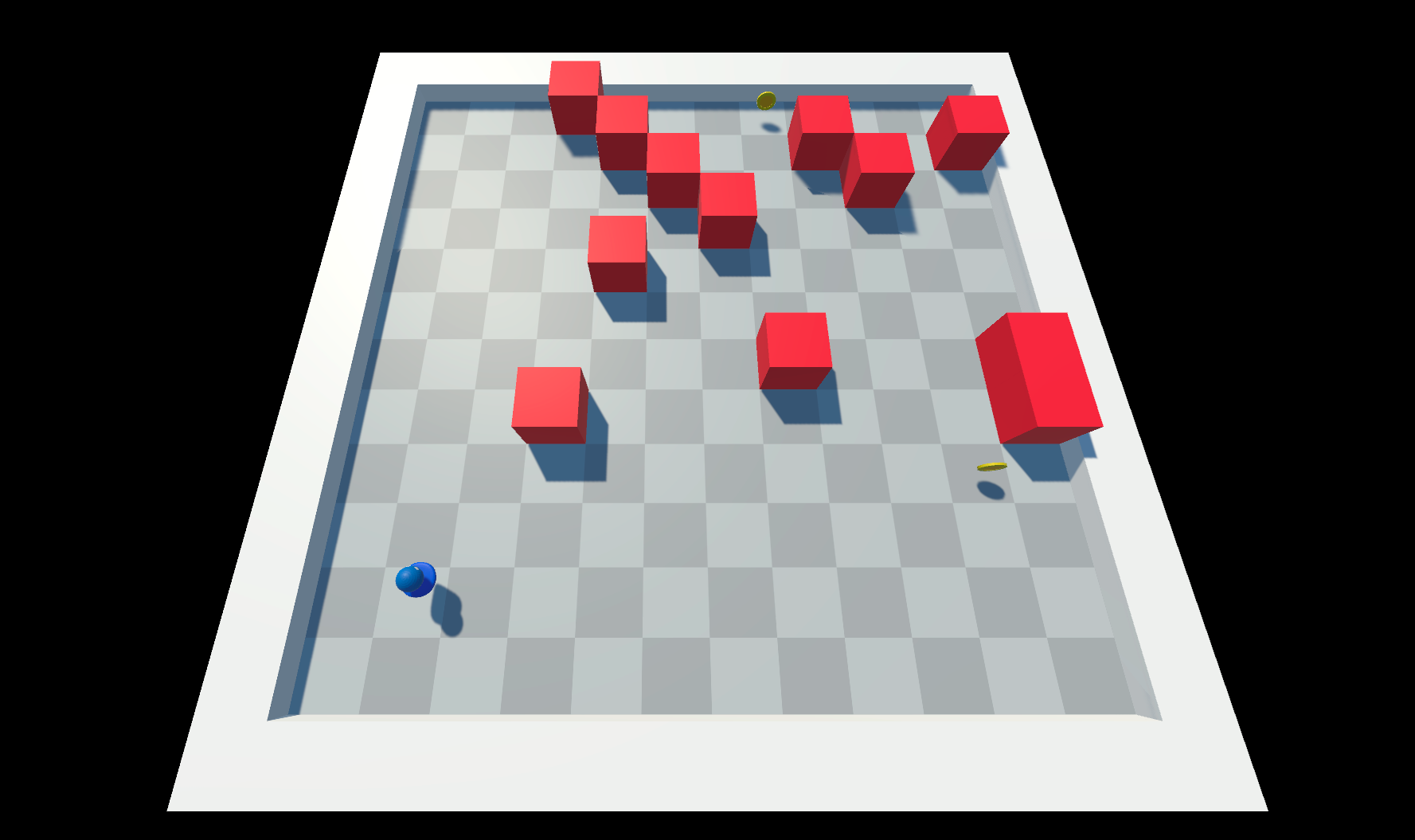

This is my first reinforcement learning project, and I'm still learning the ropes, but I’m really enjoying it—this has been one of the most fun projects I’ve worked on! I'm using Unity’s ML-Agents library to train a robot to collect coins, which involves three core concepts: environment, actions, and rewards.

- Environment: The robot observes its surroundings, such as its own position and rotation and the location of the nearest coin.

- Actions: Actions can be continuous (e.g., controlling movement along the x and z axes or adjusting speed) or discrete (e.g., turning left, moving forward, or turning right). At each step, the robot chooses an action based on what it observes.

- Rewards: Rewards are points given to the robot for completing tasks, such as reaching a coin or moving closer to it. Negative points are given for touching walls or running out of time.
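As a rough illustration, the reward rules in the list above could be sketched like this. It is written in Python for brevity rather than the C# used with ML-Agents, and the function name, parameters, and every reward magnitude are assumptions made for the example, not the project's actual values.

```python
def step_reward(prev_dist, new_dist, reached_coin, hit_wall, timed_out):
    """Return the reward for one step (all magnitudes are illustrative guesses)."""
    reward = 0.0
    if reached_coin:
        reward += 1.0   # main goal: reaching a coin
    if hit_wall:
        reward -= 0.5   # penalty for touching a wall
    if timed_out:
        reward -= 1.0   # penalty for running out of time
    # Shaping term: small bonus for moving closer to the nearest coin,
    # small penalty for moving away from it.
    reward += 0.01 * (prev_dist - new_dist)
    return reward
```

Keeping the shaping term much smaller than the coin reward is a common choice, so that the robot is nudged toward coins without being able to score well just by hovering near one.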

Right now, I’m in a trial-and-error stage, tweaking parameters and learning a lot along the way. I get very excited whenever my robot seems to get smarter after an experiment!

Follow My Progress on GitHub

Smart Parking Spot Detector with OpenCV

OpenCV + Image Processing

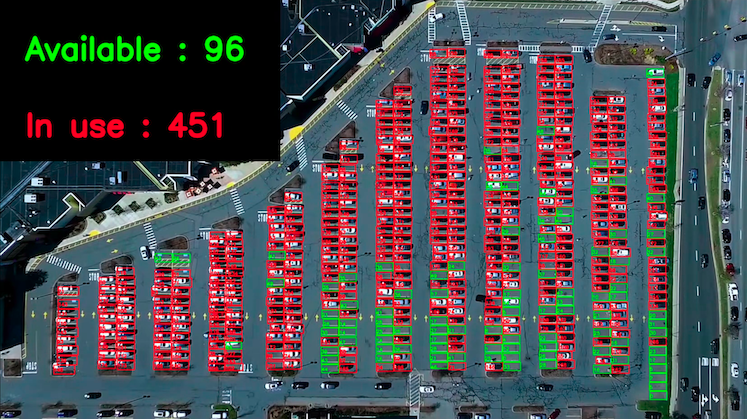

This project is designed for use at SKE2024 (Suankularb Exhibition 2024).

To create it, I started by using Canva to highlight each parking spot with a white box and make the rest of the background black. Then, I used thresholding to convert each video frame into a binary image, separating cars from the road. This part took a lot of fine-tuning to get right.

Next, I applied contour detection to identify the parking spot boxes based on the image I created in Canva. For each box, I calculated the average color to determine if it was mostly black (indicating a car was present) or lighter (indicating the spot was empty).
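The thresholding and average-color check can be sketched roughly as follows. To stay self-contained, this version uses plain Python lists instead of OpenCV images, and the function names, the `(x, y, w, h)` box format, and the cutoff values are all assumptions for illustration.

```python
def to_binary(gray, cutoff=127):
    """Threshold a 2D list of grayscale values (0-255) into 0 or 255."""
    return [[255 if p > cutoff else 0 for p in row] for row in gray]

def mean_intensity(binary, box):
    """Average pixel value inside box = (x, y, w, h)."""
    x, y, w, h = box
    total = 0
    for row in binary[y:y + h]:
        total += sum(row[x:x + w])
    return total / (w * h)

def is_occupied(binary, box, cutoff=127):
    # Following the description above: a mostly black box means a car
    # is present, a lighter box means the spot is empty.
    return mean_intensity(binary, box) < cutoff
```

For example, with a frame whose left half is dark and right half is bright, `is_occupied(to_binary(frame), (0, 0, 2, 4))` reports the left spot as taken and the right one as free.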

The system works but needs some improvement. The video doesn’t stay fixed in the exact same position throughout, which sometimes causes errors. Also, white cars and damaged road surfaces sometimes interfere with the binary image, making detection less accurate.

Sorting Algorithm Visualization

C++ + OpenCV

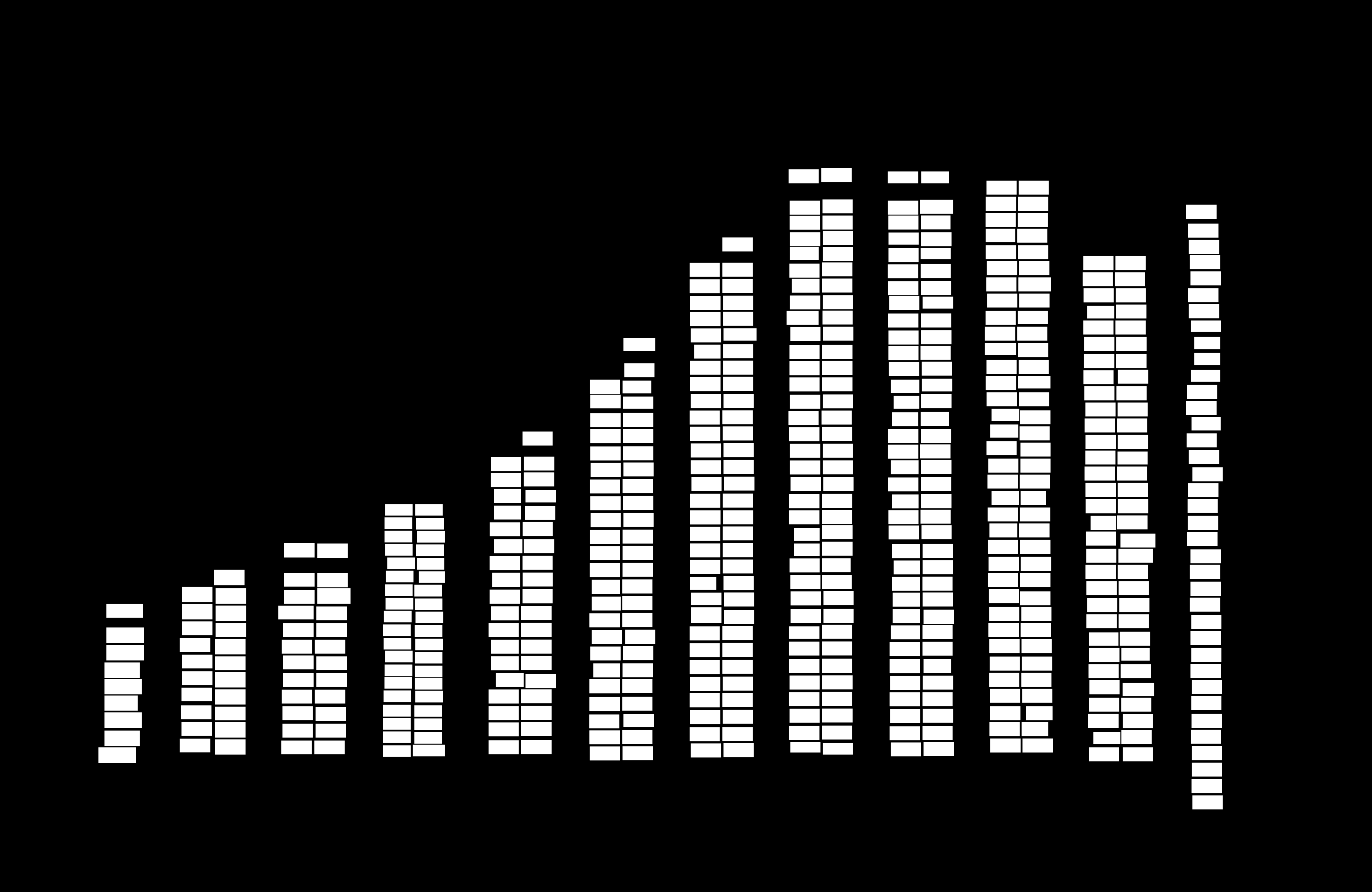

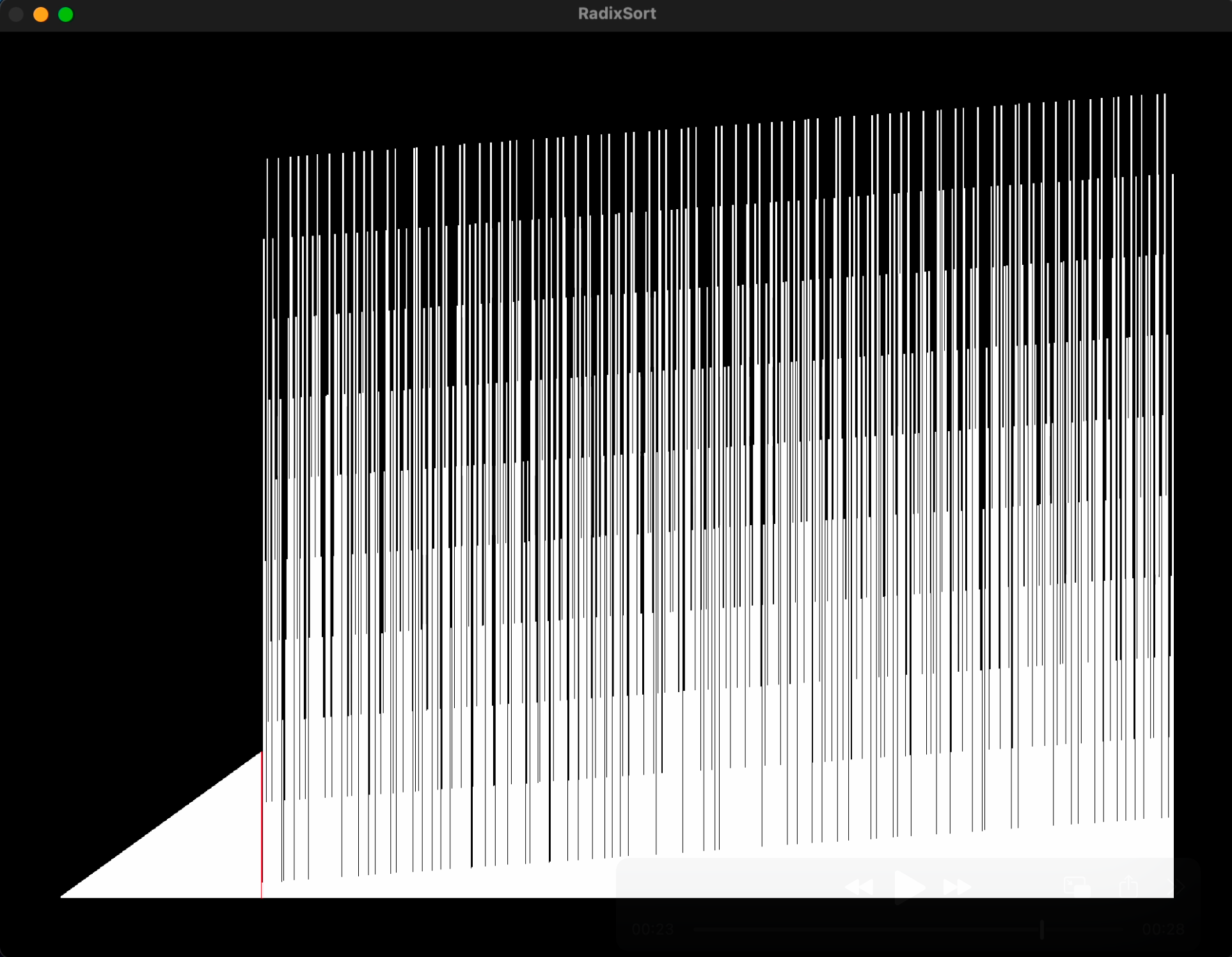

I've been working with OpenCV for a while, so I decided to try it out in C++. Initially, I thought I’d need some advanced library to handle visualizing thousands of elements in each frame. I underestimated the power of C++! It turned out OpenCV could handle it smoothly.

To build this project, my first step was figuring out how to display rectangles on the screen. I created a function to define each rectangle’s starting position (x, y) and size. Then, I made another function to render the rectangles in a graph-like way, taking parameters for the number of rectangles to be displayed.
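A minimal sketch of the layout step, in Python rather than the project's C++: it turns an array into one rectangle per element, positioned like a bar chart. The function name and the `(x, y, w, h)` tuple format are assumptions, and it assumes a non-empty list of positive integers that is no longer than the window is wide.

```python
def bar_rects(values, width, height):
    """Return one (x, y, w, h) rectangle per value, laid out like a bar chart."""
    n = len(values)
    peak = max(values)
    bar_w = width // n                    # every bar gets the same width
    rects = []
    for i, v in enumerate(values):
        bar_h = v * height // peak        # tallest value fills the full height
        # y grows downward on screen, so a bar starts at height - bar_h
        rects.append((i * bar_w, height - bar_h, bar_w, bar_h))
    return rects
```

Each tuple could then be passed to whatever drawing call the renderer uses, such as a filled-rectangle function.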

Once I could render an array visually, I moved on to implementing sorting algorithms. By using my custom functions to render each frame, I brought the sorting process to life! Now, the visualization shows each step of the sorting algorithm, helping me see the process clearly in real-time.
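One way to get a frame per step is to write the sort as a generator that yields a snapshot of the array after every change. The sketch below uses bubble sort purely because it is short; it is not one of the algorithms shown in the videos, and the function name is an assumption.

```python
def bubble_sort_frames(values):
    """Yield a copy of the list at the start and after every swap."""
    data = list(values)
    yield list(data)                      # initial, unsorted frame
    n = len(data)
    for end in range(n - 1, 0, -1):
        for i in range(end):
            if data[i] > data[i + 1]:
                data[i], data[i + 1] = data[i + 1], data[i]
                yield list(data)          # one frame per swap
```

A render loop can then iterate over `bubble_sort_frames(array)` and draw each snapshot in turn.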

See It in Action on TikTok!

Radix Sort, Merge Sort

Gesture Archery

OpenCV + Mediapipe

I joined the DevCommu Computer Vision Camp, where I learned OpenCV, Mediapipe, and other important skills. These became the foundation for many of my projects and competitions.

During the camp, I made a small project that counted how many times I lifted a dumbbell. I did this using a simple if-else statement. For my final project, I had to send in something I created, but I wanted to do something more challenging. So, I decided to make a bow-shooting game.

In this game, I track the center of my left and right hands to calculate the aiming angle, and I draw a line along that angle to check if there's a target in the way. To shoot, you open your left hand, just like you would when shooting a real bow. To reload, you bring both hands together.
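The aiming math can be sketched as below. This is only an illustration: the function names, the choice of measuring from the right hand toward the left hand, and the circular target shape are all assumptions, and the hand centers are taken as plain `(x, y)` tuples rather than Mediapipe landmark objects.

```python
import math

def aim_angle(left, right):
    """Angle in degrees of the line from the right hand to the left hand."""
    dx = left[0] - right[0]
    dy = left[1] - right[1]
    return math.degrees(math.atan2(dy, dx))

def hits_target(origin, angle_deg, target_center, target_radius):
    """True if a ray from origin at angle_deg passes through a circular target."""
    dx = math.cos(math.radians(angle_deg))
    dy = math.sin(math.radians(angle_deg))
    tx = target_center[0] - origin[0]
    ty = target_center[1] - origin[1]
    along = tx * dx + ty * dy        # how far the target is along the ray
    if along < 0:
        return False                 # target is behind the shooter
    off = abs(tx * dy - ty * dx)     # perpendicular distance from the ray
    return off <= target_radius
```

The perpendicular-distance check is what "drawing a line to see if a target is in the way" amounts to for a round target.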

It was a fun project, and it felt realistic, almost like you’re shooting a real bow!

Watch me play the Game!

Virtual Touch

Mediapipe + PyAutoGUI

This is a project I made back in 2022 for the Suankularb Open House. In this project, I used OpenCV to open the webcam and Mediapipe to track the position of my hand and fingers. Then, with the help of PyAutoGUI, I could control the screen. At that time, I set it up so it could only click and scroll up and down.
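Two small pieces of this pipeline can be sketched without a webcam. Mediapipe reports hand landmarks as coordinates normalized to the 0 to 1 range, so they need to be scaled to screen pixels before being handed to PyAutoGUI. The function names here are hypothetical, and using a thumb-to-index pinch as the click gesture is an assumption, since the gesture actually used is not described above.

```python
import math

def to_screen(norm_x, norm_y, screen_w, screen_h):
    """Map normalized [0, 1] coordinates to pixel coordinates, clamped to the screen."""
    x = min(max(norm_x, 0.0), 1.0) * (screen_w - 1)
    y = min(max(norm_y, 0.0), 1.0) * (screen_h - 1)
    return round(x), round(y)

def is_pinch(thumb_tip, index_tip, max_dist=0.05):
    """Treat thumb and index fingertips closer than max_dist as a pinch (assumed click gesture)."""
    return math.dist(thumb_tip, index_tip) < max_dist
```

In a full loop, the result of `to_screen` would go to a call such as `pyautogui.moveTo(x, y)`, and a detected pinch would trigger `pyautogui.click()`.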

On the Open House day, I showed this project to my juniors, and they were all really excited to try it out. It made me so happy to see them enjoying it! Some of them even called me “Iron Man,” which was so cool. I let them use it to scroll up and down in MS Word, and they were amazed at how it worked.

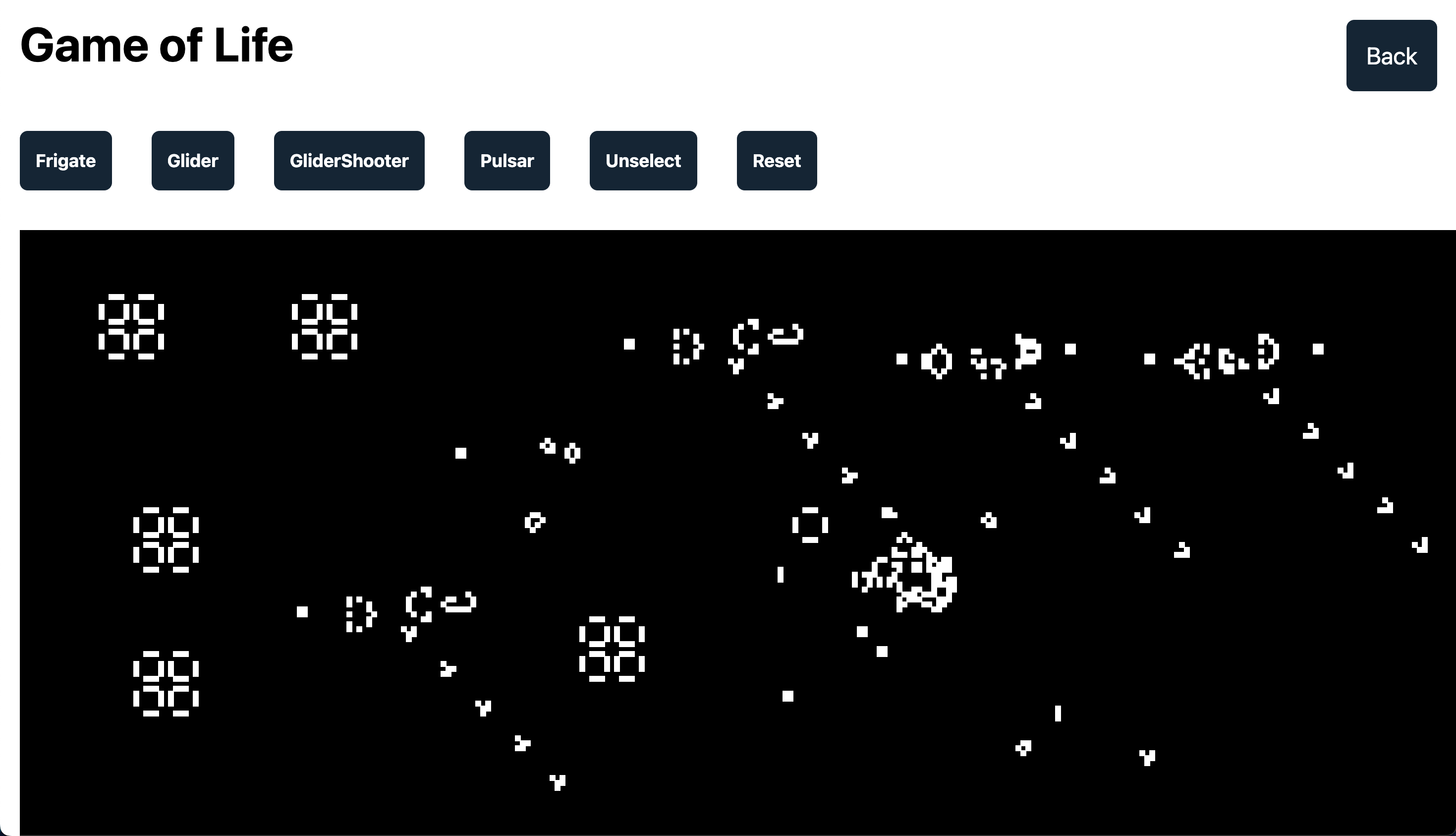

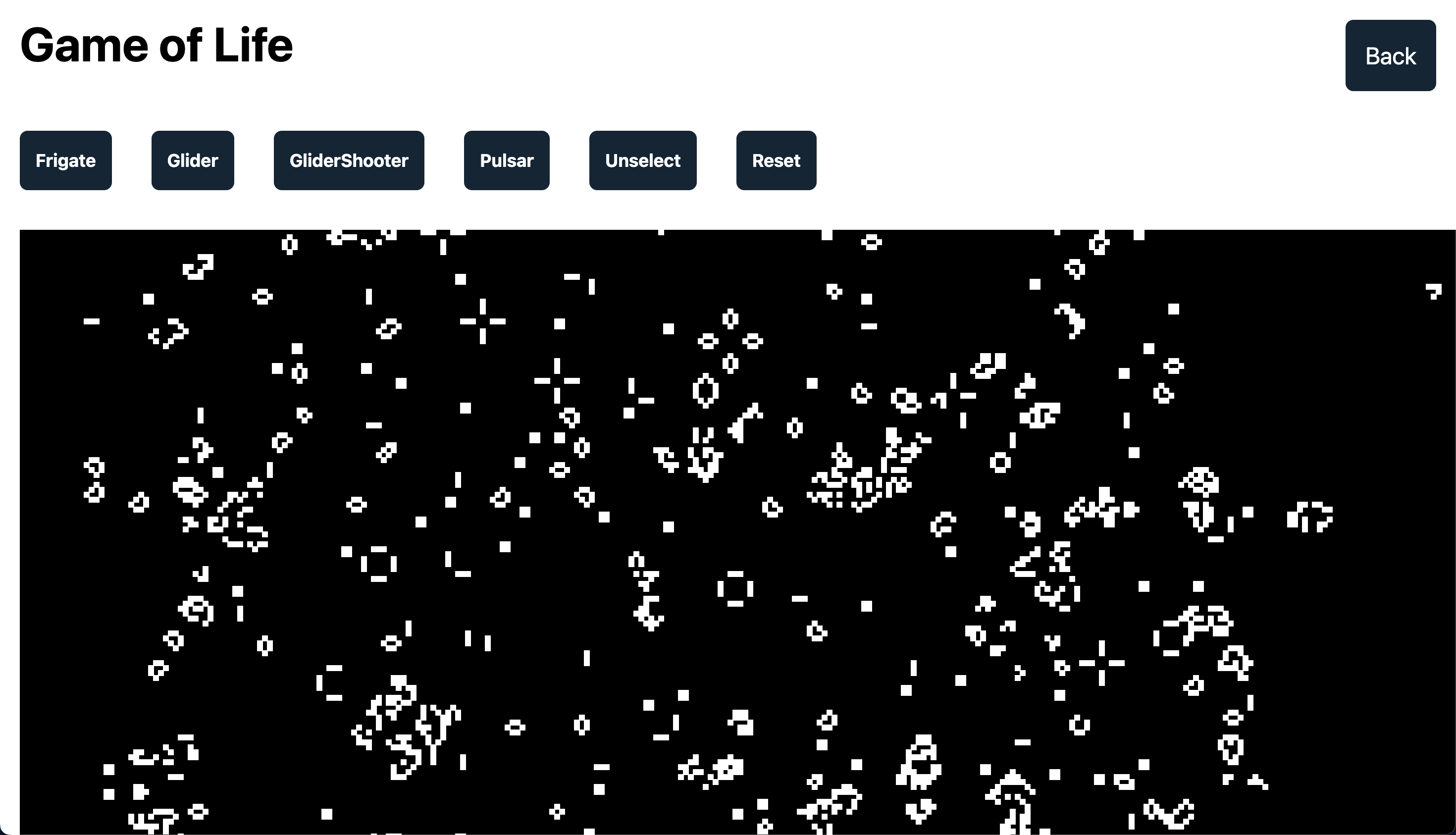

Game of Life

JavaScript

This is my very first web project. One day, I came across a video about the Game of Life on YouTube, and I thought it would be really cool to make it myself. I started researching on Google and found that you could create it as a website, so I followed a tutorial to understand the basics.

The main idea is pretty simple: you use a loop to go through a 2D grid (like a checkerboard) and check the conditions to see which cells should live or die. Once I got the basic game working, I added some buttons to let you place special patterns, like the Frigate, Glider, Pulsar, and Glider Shooter. These patterns help you see how different setups evolve over time, making it even more fun to watch.
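The grid loop can be sketched like this, in Python rather than the JavaScript the site uses. It applies the standard Game of Life rules (a live cell survives with 2 or 3 live neighbors, a dead cell becomes alive with exactly 3) and treats everything outside the grid as dead, which is an assumption about how the edges are handled. The function name is also just for illustration.

```python
def step(grid):
    """Return the next generation of a 2D grid of 0 (dead) and 1 (alive) cells."""
    rows, cols = len(grid), len(grid[0])
    new = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Count live neighbors among the 8 surrounding cells.
            n = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    if (dr or dc) and 0 <= r + dr < rows and 0 <= c + dc < cols:
                        n += grid[r + dr][c + dc]
            if grid[r][c] == 1 and n in (2, 3):
                new[r][c] = 1    # survival
            elif grid[r][c] == 0 and n == 3:
                new[r][c] = 1    # birth
    return new
```

Writing into a separate `new` grid matters: updating cells in place would let already-changed cells affect their neighbors within the same generation.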

Overall, this project was really fun to work on and is exciting to watch as the patterns come to life. I think it’s a great beginner project, and I’d recommend it to anyone new to web development. It’s easy to understand, fun to build, and you learn a lot along the way!